Projects Overview

This page features some of the projects that I have led since starting my PhD!

Drones for All

ASSETS 2024. We explored the potential of using drones as an educational vehicle to help blind and low vision (BLV) students learn programming concepts.

A Review of Assistive Robots for Blind and Low Vision People

CHI 2025. We conducted a systematic literature review to understand what, where, and how robots can benefit BLV users in their daily lives.

LLM-powered Assistive Drone for Blind and Low Vision People

Under review. We built an LLM-powered assistive drone for blind and low vision people.

Drones for All: Creating an Authentic Programming Experience for Students with Visual Impairments

Overview

Programming has become a highly sought-after skill in STEM-related studies and careers, but programming education has reached only a fraction of students with visual impairments. New methods for teaching and learning are therefore needed. This study aims to understand the potential of using drones to create an authentic learning environment that helps students with visual impairments learn programming. Based on a month-long engagement with five students with visual impairments, we present insights on using drones to support their programming education.

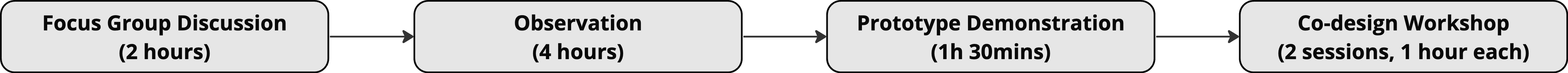

Overview of the user study. Each session took place at least one week after the previous one. The first three sessions were conducted on school premises, while the last one was conducted online via Teams.

Key Insights

Programming is challenging but empowering. We observed that all the students preferred mainstream IDEs and languages, which would be useful for their future careers and studies. Drone programming offers them an opportunity to engage in personally meaningful tasks, as they had many ideas on how drones could be programmed into assistive devices for themselves. Further, all the students were excited and curious to play with cool technology and control a flying robot. Unlike traditional learning schemes with highly visual output, drones increase the physicality of the programming output through multisensory feedback, such as audio and somatosensory feedback.

Human Robot Interaction for Blind and Low Vision People: A Systematic Literature Review

Overview

Recent years have witnessed a growing interest in using robots to support Blind and Low Vision (BLV) people in various tasks and contexts. However, the Human-Computer Interaction (HCI) community still lacks a shared understanding of what, where, and how robots can benefit BLV users in their daily lives. In light of this, we conducted a systematic literature review to help researchers navigate the current landscape of this field through an HCI lens. We followed a systematic multi-stage approach and carefully selected a corpus of 76 papers from premier HCI venues. Our review provides a comprehensive overview of the application areas, embodiments, and interaction techniques of the developed robotic systems. Further, we identified opportunities, challenges, and key considerations in this emerging field. Through this systematic review, we aim to inspire researchers, developers, designers, and HCI practitioners to create a more inclusive environment for the BLV community.

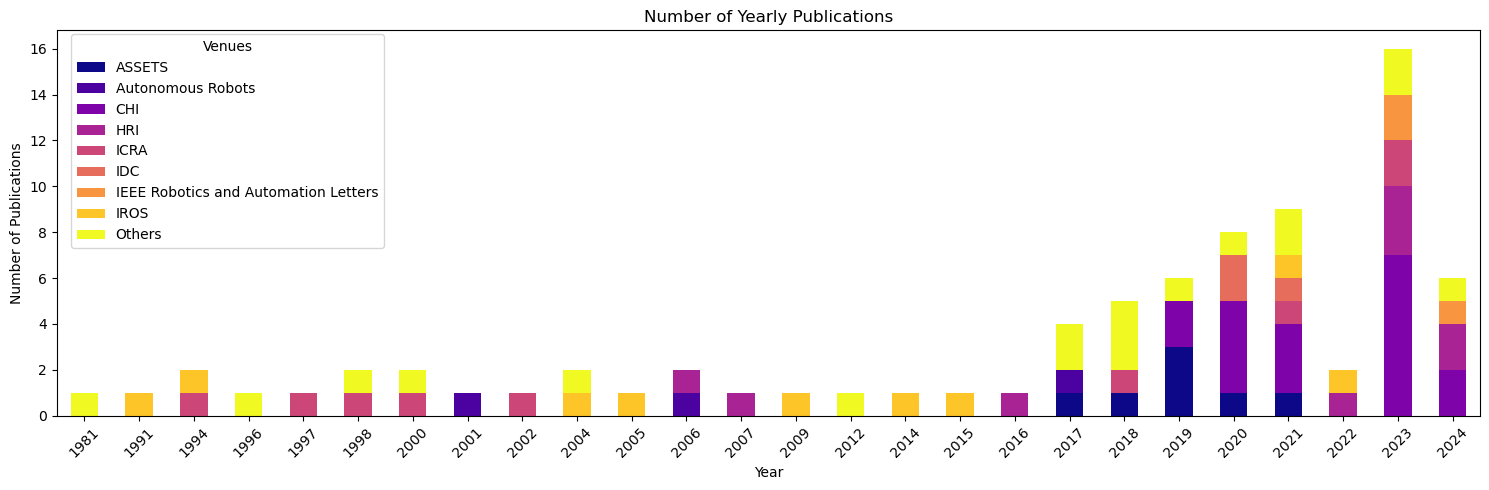

The bar chart shows the number of papers on this topic from 1981 to 2024. The number of papers has increased exponentially since 2017.

Key Insights

I'm still working on summarizing the full paper in a few sentences 💪🏻

Meanwhile, to learn more, you can read the paper here: Link

LLM-powered Assistive Drone for Blind and Low Vision People

Overview

Recently, Large Language Models (LLMs) have shown transformative potential to improve Human-Robot Interaction, particularly by enabling robots to engage users in flexible dialogue. Drones have emerged as an increasingly popular form of assistive robot for BLV individuals. In this work, we built an LLM-powered assistive drone for BLV users. Through a formative study, we identified envisioned use cases and desired interaction modalities. Then, we took a participatory design approach to build a prototype, incorporating feedback from BLV users and five domain experts. Finally, we conducted a user study with an additional group of BLV users to evaluate the iterated prototype. The results showed that BLV individuals found the interaction intuitive and acknowledged the potential value an assistive drone could add to their daily lives. This work contributes to a growing body of research on harnessing the power of LLMs to build assistive technologies for a more inclusive and accessible world for BLV individuals, and it is currently under review as a full paper.

Implementation

Overview of the hardware components of our system. The hardware includes a Tello EDU drone linked to a ThinkPad laptop. The user issues commands via a thumb-sized wireless tactile Flic button, which connects to the ThinkPad laptop via Bluetooth.
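The hardware description covers the data path (button to laptop to drone) but not the software that relays commands along it. The sketch below is an illustration, not our prototype's code. It assumes the laptop controls the drone through the Tello SDK's plain-text UDP commands (sent to 192.168.10.1, port 8889, after an initial `command` message that enters SDK mode). The gesture names, the gesture-to-command mapping, and the `ButtonDroneBridge` and `udp_sender` helpers are hypothetical, and Bluetooth handling of the Flic button is left out: gestures arrive as plain strings.

```python
import socket
from typing import Callable, Dict, Optional

# Assumption: Tello SDK default address; the drone accepts plain-text commands over UDP.
TELLO_ADDRESS = ("192.168.10.1", 8889)

# Hypothetical mapping from Flic button gestures to Tello SDK commands (illustration only).
GESTURE_TO_COMMAND: Dict[str, str] = {
    "click": "takeoff",
    "double_click": "land",
    "hold": "battery?",
}


def udp_sender(sock: socket.socket) -> Callable[[str], None]:
    """Hypothetical helper: return a function that sends one text command to the drone."""
    def send(command: str) -> None:
        sock.sendto(command.encode("utf-8"), TELLO_ADDRESS)
    return send


class ButtonDroneBridge:
    """Hypothetical relay: turns button gestures received by the laptop into drone commands."""

    def __init__(self, send: Callable[[str], None]) -> None:
        self._send = send
        self._sdk_mode = False

    def handle_gesture(self, gesture: str) -> Optional[str]:
        command = GESTURE_TO_COMMAND.get(gesture)
        if command is None:
            return None  # ignore gestures with no assigned action
        if not self._sdk_mode:
            self._send("command")  # the Tello must enter SDK mode before other commands
            self._sdk_mode = True
        self._send(command)
        return command


if __name__ == "__main__":
    # Print the commands instead of sending them, so the sketch runs without a drone.
    bridge = ButtonDroneBridge(send=print)
    for gesture in ["click", "hold", "double_click"]:
        bridge.handle_gesture(gesture)
```

Injecting `send` keeps the relay testable without hardware: passing `print` logs the commands, while `udp_sender(socket.socket(socket.AF_INET, socket.SOCK_DGRAM))` would send them to a Tello on the same Wi-Fi network.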